[Free Credits Giveaway!] From Sheet Music to Soundworlds: The Delphos Approach to Modern Sound Creation

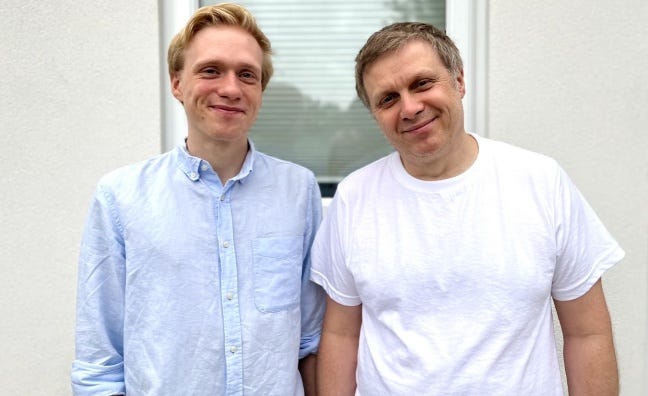

How a Father-Son Duo is Empowering Artists and Labels with Customisable AI Music Models.

From choirs to conducting the future of music creation, Ilya is an entrepreneur blending art and technology in a way that’s changing the game. With a background in mathematics and a passion for music, he and his father co-founded Delphos, an AI-driven music venture that’s offering something truly unique and valuable. Forget about algorithms churning out generic tunes—this is about empowering artists and rights holders to scale their catalogues, all while keeping a firm grip on their copyright.

During the conversation, we discussed Ilya's journey, how Delphos's tech can elevate artists while protecting copyrights, and the future of AI in music creation.

About Delphos:

Delphos works by allowing users to create their own standalone music generator called a ‘Soundworld’ into which they can load their existing ‘stems’ and use AI learning tools to compose, remix, generate beats and more.

In addition, individual Soundworlds can also be accessed by other creators for a fee, creating new revenue streams for artists, songwriters and producers without compromising their copyrights.

Thanks for taking the time out to talk today. Let's start off with a bit of background about yourself. What made you want to get into the Music AI space? What experiences led you to founding Delphos?

I started off studying mathematics at UCL from 2012 to 2016. I'm also a singer and choir conductor. I still do a little bit of that. I worked in a couple of music tech start-ups after uni, and that's where I started really getting into music data storage and manipulation, exploring what you can do with music. Around this time, Aiva became quite big. That's quite a while ago.

My co-founder, who's my dad, and I started our first start-up around then. It was related to sheet music. We really liked the idea of arranging sheet music, especially for choirs, which was the industry I was working in. We built a simple web app called Orarion. I mean, I say simple, but it took us a very long time to make because any music tech, unless it's just basic audio manipulation, is a really difficult task. Anything symbolic with music looks deceptively simple.

The goal was to see if we could take a product for ourselves from an idea to an actual working product. We did that, but it didn't take off as a business. It was the first commercial app aimed at the Orthodox Christian community, and everyone was like, "What do you mean we have to pay to use this? Church is free." After a while, we decided to start another venture called Delphos.

Originally, it was going to be an operating system for people making music apps. We built a few things for clients, like an app for teaching piano, and we've done a few vocal apps over the years. But with the NFT craze taking off, we realised people really like creating their own things and scaling them. When people were doing all these Yacht Club-type projects, they weren't necessarily jazzed about the crypto aspect or making money. They knew they weren't going to become billionaires. They liked setting something up and watching it replicate 10,000 times. We saw that people would love that in music too. Music has a strong emotional connection, and the artist-driven creativity was what we saw as valuable. Then, everyone else had the same idea and started building models, and technology caught up with it.

That's why we have Suno and Udio now, which stand on the shoulders of all the tech developed back then. We had a different idea. We knew from the beginning that none of this would be worth anything unless the output could be copyrighted.

A few years ago, there was a big case with Kris Kashtanova, who made a comic book where all the pictures were AI-generated. When Kashtanova tried to register the copyright, the US Copyright Office ruled that the pictures weren't copyrightable; only the text and the selection and arrangement of the work could be protected. This is key because with large models using lots of data, it's difficult to tell what came from the original source.

There are companies claiming they can do this, but a quick look at how these models are set up shows it's risky to claim you can determine the source. We knew if we were going to make this work, we needed a model with zero initial knowledge. It might be set up in a particular way (obviously, that's the secret sauce), but it had to adapt easily to new data. Pre-trained models you fine-tune have the same issue. The amount of output a single musician could produce might be 10 tracks or 100 tracks, but it needs to be original, not just a replication of the training data.

We built that. It took a long time, but that's how it works. You input some tracks you own the rights to, preferably in the same style. If you give it 5 to 10 tracks, it will produce something very derivative. But if you give it 100 to 200 tracks, it starts being very original. Eventually, this evolved into a product called Soundworld.

Anybody can licence their own model, feed it with their own data, and spin up a completely private instance. It doesn't communicate with any other AI model. Just because your model is good, it isn't going to improve someone else's. The key thing is, it's very commandable. You can specify the key, chord progression, BPM, and song form. You can dictate the arrangement, like starting with bass, kick drum, and clap for the first four bars, then adding lead elements. That level of control matters because it's aimed at professional producers, record labels, and catalogues.
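To make "commandable" concrete, here is a minimal Python sketch of what such a generation request could look like as data. It is an illustration only: `GenerationRequest`, `Section`, and every field name are hypothetical and are not taken from the Delphos API, which the interview does not describe.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Section:
    """One block of the arrangement: which parts play, and for how many bars."""
    bars: int
    parts: list[str]


@dataclass
class GenerationRequest:
    """Hypothetical request shape (an assumption, not the Delphos API)."""
    key: str
    bpm: int
    chord_progression: list[str]
    song_form: list[str]
    arrangement: list[Section] = field(default_factory=list)

    def total_bars(self) -> int:
        # Sum the bar counts of every arrangement section.
        return sum(section.bars for section in self.arrangement)

    def duration_seconds(self, beats_per_bar: int = 4) -> float:
        # bars * beats per bar gives beats; 60 / bpm is seconds per beat.
        return self.total_bars() * beats_per_bar * 60 / self.bpm


# Bass, kick drum, and clap for the first four bars, then add a lead.
request = GenerationRequest(
    key="A minor",
    bpm=120,
    chord_progression=["Am", "F", "C", "G"],
    song_form=["intro", "verse"],
    arrangement=[
        Section(bars=4, parts=["bass", "kick drum", "clap"]),
        Section(bars=8, parts=["bass", "kick drum", "clap", "lead"]),
    ],
)

print(request.total_bars(), request.duration_seconds())
```

The point of the sketch is that every musical decision is an explicit field a producer sets, rather than something inferred from a free-text prompt.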

What are the main use cases for Delphos?

Yeah, so the primary use case is almost like a catalogue multiplier. If you're a catalogue or a record label and you've got a set of albums that are doing very well—either streaming or sync—you can train a Soundworld on these albums or even potentially the best bits of those albums, and then you can print as much of it as you want. So, for example, say you have a brief that comes in. Nothing's quite exactly what they need, but you've got this data. You feed it into the Soundworld, train it up, and then you just do what the brief says. That process takes you no time at all.

There's still very much the opportunity for a producer to be in the loop, because once you generate, you might want to just take the stems out of it and adjust the levels. This is my favourite use case because it allows the model to do everything it can do. The Soundworld can output the track as a finished master track, or as separate stems, or as a MIDI file. And at that point, because it's training on your work and you're the one who outputs it, there are literally no copyright issues.

You've literally just written a new track in a fraction of the time and a fraction of the cost. So, for record labels and catalogues, it means that they can leverage the IP that they own to the max.

When you're dealing with such small training data, you can dictate exactly how much a particular person contributes to a particular model. It becomes a lot easier to create an agreement on that output.

So, say you and I were to make a Soundworld together. You contributed the drums, and I contributed chords, melody, and bassline. It becomes very easy to say from the output who did what. For example, in the Beatles, when you listen to a track, you know exactly who's doing the drums. Now, we've got a few partnerships we're actively doing right now, where we're doing this on huge catalogues.

It's packaged as an API, and all we have to do is basically add some metadata saying we've got something from these 5 or 10 artists coming into this one model. In some cases, we're just saying, "Okay, well, 17% belongs to contributor one, 15% belongs to contributor two," and divide it up that way. Sometimes you can actually be a lot more specific because we know that we sampled, for example, this thing.
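The percentage split described here comes down to simple arithmetic over per-model metadata. Below is a minimal Python sketch under stated assumptions: the 17% and 15% figures come from the interview, while the third entry, the revenue amount, and the `split_revenue` function are hypothetical and only there to make the shares add up to 100.

```python
from __future__ import annotations

from decimal import Decimal


def split_revenue(shares: dict[str, Decimal], revenue: Decimal) -> dict[str, Decimal]:
    """Divide revenue between contributors according to percentage shares."""
    total = sum(shares.values())
    if total != Decimal("100"):
        raise ValueError(f"shares must add up to 100, got {total}")
    cent = Decimal("0.01")
    # Each payout is revenue * percentage / 100, rounded to whole cents.
    return {
        name: (revenue * pct / Decimal("100")).quantize(cent)
        for name, pct in shares.items()
    }


# Hypothetical metadata attached to one Soundworld.
shares = {
    "contributor_one": Decimal("17"),
    "contributor_two": Decimal("15"),
    "remaining_contributors": Decimal("68"),  # assumed, to complete the split
}

payouts = split_revenue(shares, Decimal("1000.00"))
print(payouts)
```

Rounding each payout to cents can leave a small remainder for less convenient numbers, so a real agreement would also need a rule for who receives it.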

And I use the word "sampled" because there's already a lot of precedent for how that works in the industry. It just comes down to simple agreements. "Here's my IP, here are the set of rights that I can give to process this."

It's a lot simpler than these very large models, which brings me to another detail. The reason we did it this way is there's a lot of talk right now about licensing training data. I'm really happy that there are big moves towards those kinds of models, but it doesn't solve a lot of issues that rights holders will have in the future.

Primarily, attribution. When you licence data from 100,000 creators, you're not going to have 100,000 creators on the outputs. You're not even going to have 100 on the outputs. Even if you do manage to create this system where you can shrink it down to whoever was actually used most likely, the issue is, how do I get out of it? You've trained on my music, and now there's a bit too much of my music out there. I don't want my brand to be diluted.

To someone used to a regular production library, that might be less of an issue. If you're doing library music, it's like, "Thank God my music is being used somewhere." But if you're a big producer and your name is attached to this, at some point you're going to want to shrink down and make your name really mean something.

There's very little you can do because you've signed those rights already. You got paid, you might be getting continual royalties from that model, but there's very little you can do about it. You can't just ask them, "Hey, retrain your entire model, just minus 75 tracks that I gave you."

Because that's way too expensive for them. The beauty of a Soundworld and these private AI generators is, as soon as you're done with the Soundworld and you don't want to do it, you just delete it. You shut it down, you wind it down.

On the Delphos side, the integrations might no longer have access to your Soundworld, but the artist is still in complete control of that. To be honest, I don't actually see it working any other way.

When you make a Soundworld, you can share it with someone else. So if you make a Soundworld, you can then pass it on to, say, me. I'm a producer, I make something and I want a jazz bassline, I just access your Soundworld. I have it generate a bassline in my tempo, in my chord progression, in whatever it is that I need it to do, but you didn't actually have to do the work yourself.

So I get a notification that I've received a sample from your jazz Soundworld, and at that point it is not cleared for distribution. I get you on the track, like on the copyright, and then it becomes cleared. What's really interesting there is that people can grow their catalogue very easily.

What features or capabilities must your AI models have to perform their tasks well?

Our AI models need to represent music authentically. In the realm of AI music, the key is not just the model itself but the data it processes. Music data is highly nuanced and less homogeneous than text or art. Authenticity is crucial for music generators. For instance, anyone can mimic the Beatles' style, but the genuine sound of the Beatles' late '60s British music is timeless because it's authentic.

At Delphos, we strive for our Soundworlds to sound genuinely authentic. We have partners who invest significant time retraining and adding new sounds to their Soundworlds, resulting in music that feels real and connects with listeners. Authenticity ensures that the AI-generated music is enjoyed and feels genuine, regardless of its origin.

Large models tend to average out over diverse datasets, losing the unique essence of specific sub-genres or artists. For example, stride jazz, with its limited body of recordings from performers like Donald Lambert, is challenging to capture accurately in large models. However, a dedicated Soundworld focused solely on artists like Lambert, Fats Waller, or Art Tatum can truly capture their distinct styles.

Are there any accepted "truths" about AI's role in music creation that you disagree with based on your discussions with musicians? What's your contrasting viewpoint and evidence?

Many musicians we work with started off being very anti-AI. There's a persistent belief that human-composed melodies are inherently more valuable than AI-composed ones. I understand this, especially in genres like sacred choral music, which is meant to be spiritually inspired.

However, this scepticism isn't new. The same resistance met electronic music and sampling. Pioneers like Daphne Oram and Delia Derbyshire faced antagonism in the '50s and '60s. Yet, sampling evolved into a respected art form, exemplified by artists like Kanye West. If something can be done well or poorly, it has value.

One "truth" I disagree with is the idea that AI personas will dominate music. While virtual artists are popular in some markets, I don't see this becoming the norm globally. Despite advances, people will continue to connect with human artists like Taylor Swift, forming emotional bonds that AI can't replicate. Our attachment to human-created art isn't going away just because making music becomes easier.

How do you see the future of AI in the music industry evolving in the next 5-10 years? Where do you envision Delphos in that future?

Currently, the music industry feels like it's in a war over copyright and regulation, with many stakeholders waiting to see what happens next. At Delphos, we frequently engage with IP lawyers from the US, UK, Europe, India, and beyond to navigate these challenges.

AI influencers often agree that while AI's potential is exciting, it also raises ethical concerns. Companies like Suno are creating music experiences and storyboarding tools, which are fantastic but different from what Delphos does.

What I hope will happen, and what I will be fighting for, is for rights holders to have the agency to control the AI narrative. If we disincentivize people from creating music, in 20 or 30 years, we might all be generating music through text prompts. What's the point of putting so much effort into creating music if it can just be replaced by someone using a fair use model?

I believe the music industry will soon realise the massive opportunities AI presents and the high cost of not embracing it. We might see significant lawsuits or a complete industry shift. However, AI also offers opportunities to indie artists that the current music industry does not. By controlling your own copyright printer, you can fully capitalise on these opportunities.

AI also offers indie artists new possibilities for self-distribution and better fan engagement. By controlling their copyright and leveraging AI tools, artists can bypass traditional industry barriers, collaborate with big names, and tell their stories more effectively.

Can you share anything about the next thing you are shipping or working on?

Yes, our next release will be a game-changer for professional producers, comparable to the impact Suno has had. It will let you operate Soundworlds and AI models as easily as a musical instrument, directly within your DAW. While I don’t have an exact release date, it’s coming soon, and beta testing will start first. Our partners have already created some amazing tracks with it, which is very exciting to see.

What advice would you give to aspiring entrepreneurs looking to enter the AI or music tech space?

My wife asked why I started this, and I told her it’s tough to pinpoint. For aspiring entrepreneurs in AI music, one key piece of advice is to work in the industry before launching a startup. Many successful entrepreneurs spent 10-15 years in their field, gaining essential connections and industry knowledge. I had a vision but lacked relationships, and that made early business development challenging.

In music, building relationships is crucial. The labels and companies we work with often took over a year to start collaborating, as we focused on nurturing these connections. Don’t hide what you’re doing—start building these relationships early. Engage with the industry and connect with people.

Additionally, understand who owns and controls data before you start. You don’t want to build a product and then struggle with data access. The larger companies might seem dominant, but they face their own innovation challenges.

Be prepared for setbacks and don’t give up easily. For example, when we launched the Delphos beta, Google’s MusicLM was released the same day, diverting attention from our product. The key is to persist through challenges and keep moving forward.

Is there anything else you'd like to share about Delphos or your personal journey that we haven’t covered?

One interesting takeaway from our work with Soundworlds is how it improves musicianship. Many of our composers and producers have said that working with Soundworlds has made them better musicians by expanding their understanding and versatility. It’s been a significant highlight for us, and I hope that when we release the tool for everyone to train their own Soundworlds, it will have the same impact.

🎉 Get 200 loops for FREE! 🎉 To celebrate the launch of Delphos Looper, we're giving you $10 worth of credits — enough for 200 loops! Just use the promo code LooperLaunch200 at checkout and start creating the exact loop you're looking for. 🎶 Don’t miss out on this limited-time offer!

Browse the Music AI Archive to find AI Tools for your Music

https://www.musicworks.ai/

Find out more about Delphos

Delphos Links: Website | LinkedIn | Twitter/X | Ilya’s LinkedIn